Overview

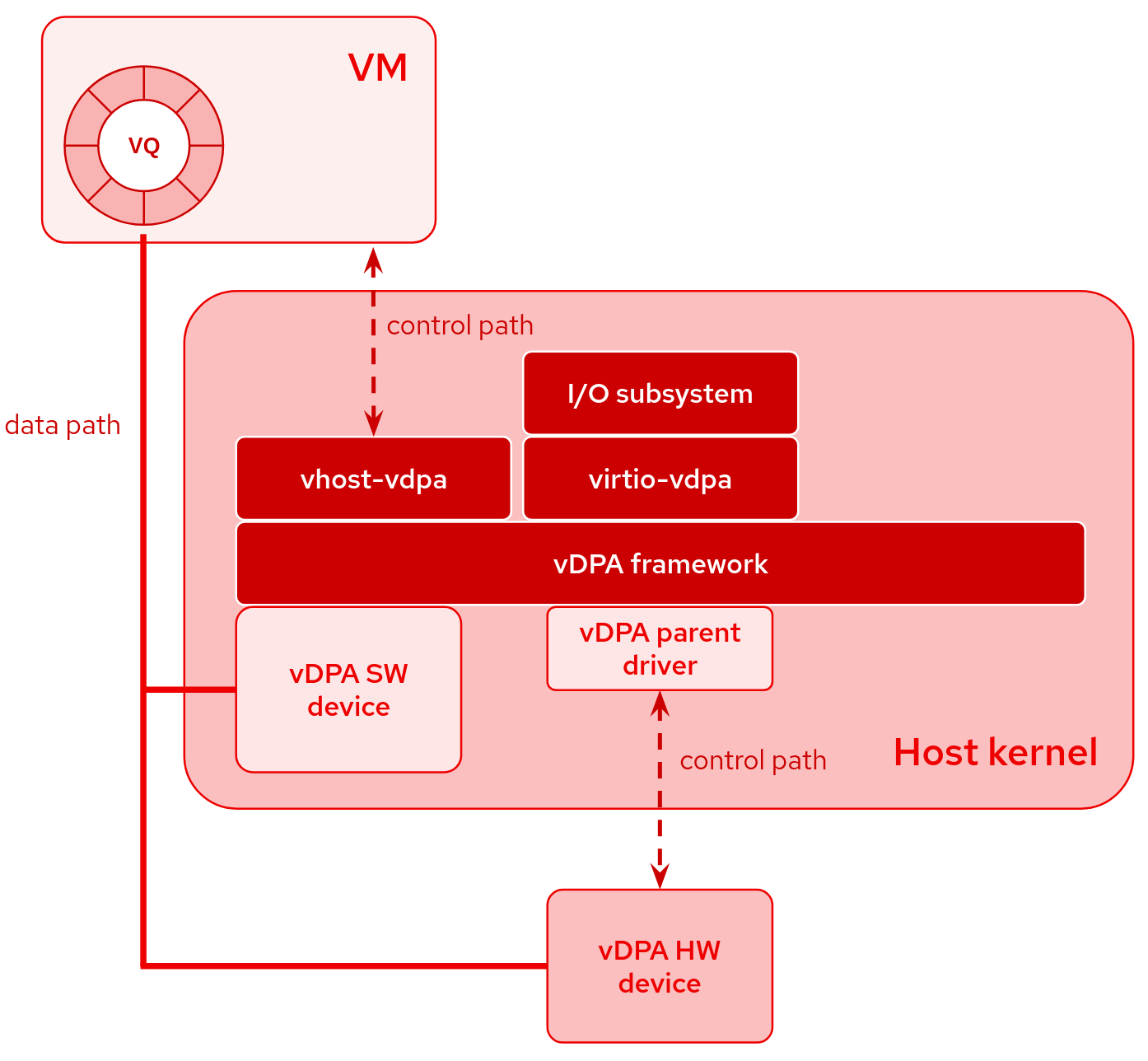

A vDPA device is a device whose datapath complies with the virtio specification, but whose control path is vendor specific.

vDPA devices can be either implemented in physical hardware or emulated in software.

A small vDPA parent driver in the host kernel is required only for the control path. The main advantage is the unified software stack for all vDPA devices:

- vhost interface (vhost-vdpa) for userspace or guest virtio drivers, such as a VM running in QEMU

- virtio interface (virtio-vdpa) for bare-metal or containerized applications running in the host

- management interface (vdpa netlink) for instantiating devices and configuring virtio parameters
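
To check which of the first two interfaces a device is currently exposed through, one option is to look at the bus driver it is bound to. The following is a minimal sketch, assuming the vDPA bus is visible in sysfs under `/sys/bus/vdpa` and that the bus drivers are named `vhost_vdpa` and `virtio_vdpa`. The function name `vdpa_bound_driver` and its optional root argument are hypothetical helpers for illustration, not part of any existing tool.

```shell
# Print the vDPA bus driver a device is bound to, or "none" if unbound.
# Usage: vdpa_bound_driver DEVICE [SYSFS_BUS_ROOT]
# SYSFS_BUS_ROOT defaults to /sys/bus/vdpa (assumed location of the vDPA bus).
vdpa_bound_driver() {
    local dev="$1"
    local root="${2:-/sys/bus/vdpa}"
    local link="$root/devices/$dev/driver"
    if [ -L "$link" ]; then
        # The last path component of the symlink target is the driver name,
        # e.g. vhost_vdpa or virtio_vdpa.
        basename "$(readlink "$link")"
    else
        echo none
    fi
}
```

For a device named `vdpa0` (a placeholder name), `vdpa_bound_driver vdpa0` would print the bound driver, or `none` when no bus driver has claimed the device.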

Status

Available upstream since:

- Linux 5.7+

- QEMU 5.1+

- libvirt 6.9.0+

- iproute2/vdpa 5.12.0+
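
A script that depends on vDPA may want to compare installed versions against the minimums listed above. The sketch below is one way to do that, assuming `sort -V` (version sort, as provided by GNU coreutils) is available and that versions are plain dotted numbers without suffixes. The helper name `version_at_least` is hypothetical.

```shell
# Return success if version $1 is at least version $2.
# Relies on `sort -V` (assumed available): the smaller of the two
# versions sorts first, so $2 must come out on top.
version_at_least() {
    local have="$1" want="$2"
    [ "$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)" = "$want" ]
}
```

For example, `version_at_least "$(uname -r | cut -d- -f1)" 5.7 && echo "kernel is new enough"` checks the running kernel, under the assumption that the release string starts with a dotted version followed by `-`.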

Links

Blog Posts

- Hands on vDPA: what do you do when you ain’t got the hardware v2 (Part 2)

- Hands on vDPA: what do you do when you ain’t got the hardware v2 (Part 1)

- Introducing VDUSE: a software-defined datapath for virtio

- Hardening Virtio for emerging security use cases

- Hyperscale virtio/vDPA introduction: One control plane to rule them all

- Hands on vDPA: what do you do when you ain’t got the hardware

- vDPA kernel framework part 3: usage for VMs and containers

- vDPA kernel framework part 2: vDPA bus drivers for kernel subsystem interactions

- vDPA kernel framework part 1: vDPA bus for abstracting hardware

- Introduction to vDPA kernel framework

- How vDPA can help network service providers simplify CNF/VNF certification

- vDPA hands on: The proof is in the pudding

- How deep does the vDPA rabbit hole go?

- Achieving network wirespeed in an open standard manner: introducing vDPA

Presentations

- Hyperscale vDPA @ KVM Forum 2021

- VDUSE - vDPA Device in Userspace @ KVM Forum 2021

- vdpa-blk: Unified Hardware and Software Offload for virtio-blk @ KVM Forum 2021

- Bringing vDPA to Life in Kubernetes @ DevConf.cz 2021

- vDPA Support in Linux Kernel @ KVM Forum 2020

- vDPA: on the road to production @ DPDK “Virtual” Userspace Summit 2020

- vdpa: vhost-mdev as a New vhost Protocol Transport @ KVM Forum 2018

Community

Mailing lists:

- Linux’s vDPA framework: virtualization@lists.linux-foundation.org (listinfo)

Frequently Asked Questions

Which hardware vDPA devices are supported in Linux?

Currently upstream Linux contains drivers for the following vDPA devices:

- virtio-net

  - Intel IFC VF vDPA driver (CONFIG_IFCVF)

  - Mellanox ConnectX vDPA driver (CONFIG_MLX5_VDPA_NET)

Which software vDPA devices are supported in Linux?

Currently, the software vDPA devices supported in the Linux kernel are device simulators.

There is an ongoing effort to support the vdpa-blk in-kernel software device.

What are in-kernel vDPA device simulators useful for?

The vDPA device simulators are useful for testing, prototyping, and development of the vDPA software stack, from the layers in the kernel (e.g. vhost-vdpa) up to the VMMs.

The following kernel modules are currently available:

- vdpa-sim-net (CONFIG_VDPA_SIM_NET) - vDPA networking device simulator which loops TX traffic back to RX

- vdpa-sim-blk (CONFIG_VDPA_SIM_BLK) - vDPA block device simulator which terminates I/O requests in a memory buffer (i.e. ramdisk)
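
Whether these modules are available depends on how the running kernel was configured. The sketch below reports the state of a set of config options from a kernel config file. The helper name `kconfig_state` is hypothetical, and the location of the config file is an assumption that varies between distributions.

```shell
# Report whether each given option is built in (y), a module (m),
# or not enabled (n), according to a kernel config file.
# Usage: kconfig_state CONFIG_FILE OPTION...
kconfig_state() {
    local config_file="$1" opt value
    shift
    for opt in "$@"; do
        # Enabled options appear as OPTION=y or OPTION=m; disabled ones
        # are absent or written as "# OPTION is not set".
        value=$(sed -n "s/^${opt}=\([ym]\)\$/\1/p" "$config_file" | head -n 1)
        echo "${opt}=${value:-n}"
    done
}
```

On systems that ship the config under `/boot` (an assumption), `kconfig_state "/boot/config-$(uname -r)" CONFIG_VDPA_SIM_NET CONFIG_VDPA_SIM_BLK` prints one line per option.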

How to use the iproute2/vdpa tool?

The vdpa management tool communicates with the vDPA framework in the kernel through the vDPA netlink API. It can create and destroy devices, and control their parameters.

An example using the in-kernel simulators:

    # Load vDPA net and block simulators kernel modules
    $ modprobe vdpa-sim-net
    $ modprobe vdpa-sim-blk

    # List vdpa management device attributes
    $ vdpa mgmtdev show
    vdpasim_blk:
      supported_classes block
    vdpasim_net:
      supported_classes net

    # Add `vdpa-net1` device through `vdpasim_net` management device
    $ vdpa dev add name vdpa-net1 mgmtdev vdpasim_net

    # Add `vdpa-blk1` device through `vdpasim_blk` management device
    $ vdpa dev add name vdpa-blk1 mgmtdev vdpasim_blk

    # List all vdpa devices on the system
    $ vdpa dev show
    vdpa-net1: type network mgmtdev vdpasim_net vendor_id 0 max_vqs 2 max_vq_size 256
    vdpa-blk1: type block mgmtdev vdpasim_blk vendor_id 0 max_vqs 1 max_vq_size 256

    # As above, but using pretty[-p] JSON[-j] output
    $ vdpa dev show -jp
    {
        "dev": {
            "vdpa-net1": {
                "type": "network",
                "mgmtdev": "vdpasim_net",
                "vendor_id": 0,
                "max_vqs": 2,
                "max_vq_size": 256
            },
            "vdpa-blk1": {
                "type": "block",
                "mgmtdev": "vdpasim_blk",
                "vendor_id": 0,
                "max_vqs": 1,
                "max_vq_size": 256
            }
        }
    }
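
When scripting around the tool, it can be handy to pick device names out of the listing. The sketch below filters the plain-text `vdpa dev show` output by device type. It assumes the one-line-per-device layout shown above, which may differ between iproute2 versions, so the JSON output is likely the more robust choice for anything long-lived. The helper name `vdpa_devs_by_type` is hypothetical.

```shell
# Read `vdpa dev show` text output on stdin and print the names of
# devices whose "type" field equals the given value.
# Usage: vdpa dev show | vdpa_devs_by_type TYPE
vdpa_devs_by_type() {
    awk -v want="$1" '
        $2 == "type" && $3 == want {
            name = $1
            sub(/:$/, "", name)   # strip the trailing colon after the name
            print name
        }'
}
```

With the two simulator devices created above, `vdpa dev show | vdpa_devs_by_type network` would be expected to print `vdpa-net1`.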

iproute2/vdpa man pages: